A collaborative project combining millions of images and artificial intelligence

to develop a tool for the automatic recognition of the European fauna in camera-trap images

Camera traps have revolutionized the way ecologists monitor biodiversity and population abundances. Their full potential is, however, only realized when the hundreds of thousands of images collected can be classified quickly, ideally with minimal human intervention. Machine learning approaches, and deep learning models in particular, have recently allowed extraordinary progress towards this end. There is, however, currently no model available that can automatically identify the French fauna in camera-trap images.

The DeepFaune initiative aims to fill this gap.

DeepFaune is a large-scale collaboration between dozens of partners involved in research, conservation and management of wildlife in France, to (1) build the first and largest dataset of annotated camera-trap images collected in France, and (2) train successful species classification models using deep learning approaches.

We also provide the community with free, user-friendly, cross-platform software to use the models developed, so that anyone can run our best models on a personal computer to classify their own camera-trap images or videos.

Data and Model

A large training dataset

The success of our collaboration with our partners has allowed us to gather over a million annotated pictures, likely representing the largest database of this kind in France.

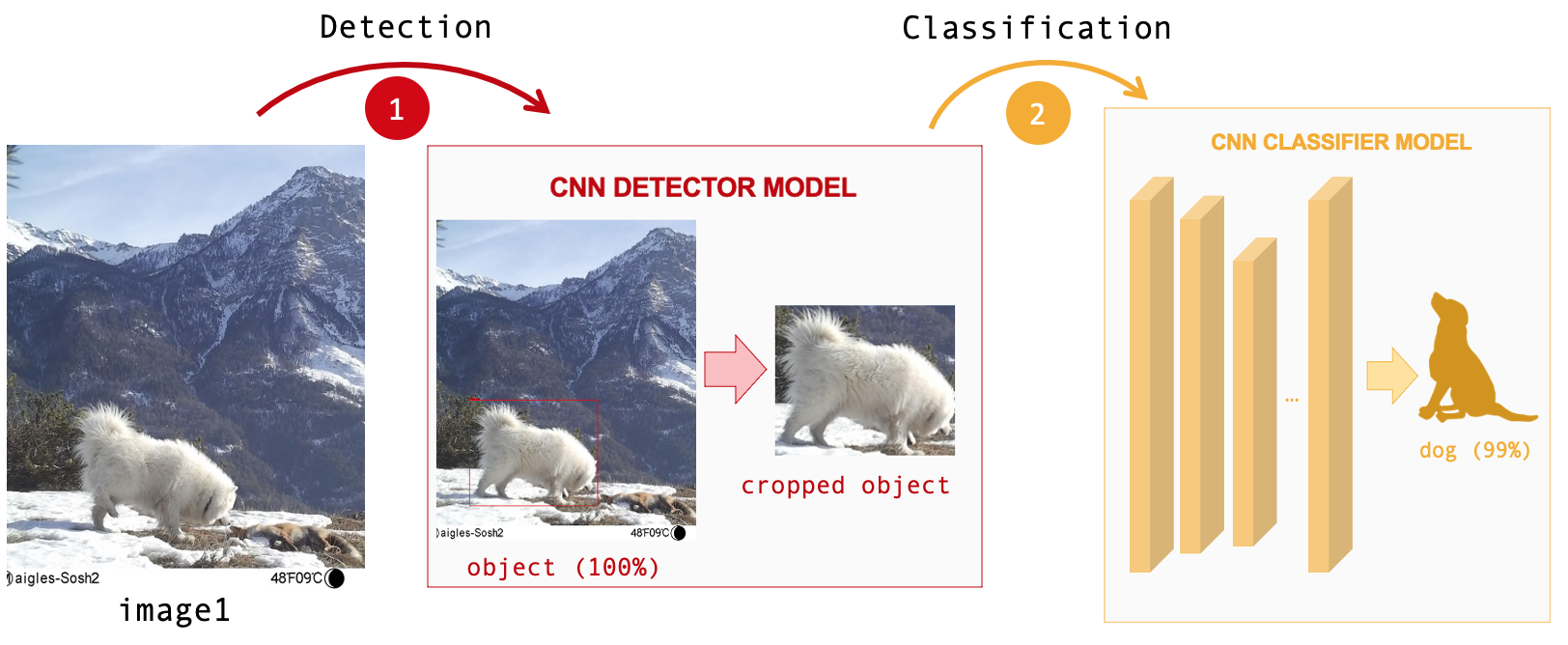

A two-step approach

Our approach is based on two models: 1) a detection model identifies whether an animal, human or vehicle is present in the image, as ‘empty’ images are often collected due to false triggers; 2) a classification model predicts the class (i.e. species or higher taxonomic group) to which the detected animal belongs.
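As an illustration only, the two-step logic can be sketched as follows. This is not the DeepFaune source code: the `Detection` type, the `detect` and `classify` callables, and the mapping of detector categories to class names are hypothetical placeholders for whatever models are actually used.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


@dataclass
class Detection:
    """One object found by the (hypothetical) detection model."""
    kind: str     # assumed to be 'animal', 'human' or 'vehicle'
    box: Box
    score: float


# Assumption: non-animal detections map directly onto a final class name.
NON_ANIMAL_CLASS = {"human": "human", "vehicle": "vehicule"}


def predict(
    image: object,
    detect: Callable[[object], List[Detection]],
    classify: Callable[[object, Box], Tuple[str, float]],
) -> Tuple[str, Optional[float]]:
    """Return (class name, confidence score) for one image."""
    detections = detect(image)
    if not detections:
        # Step 1 found nothing: most likely a false trigger.
        return "empty", None
    # Keep only the most confident detection (a simplification).
    best = max(detections, key=lambda d: d.score)
    if best.kind in NON_ANIMAL_CLASS:
        return NON_ANIMAL_CLASS[best.kind], best.score
    # Step 2: classify the region containing the detected animal.
    return classify(image, best.box)
```

The classifier is only called when the detector reports an animal, so empty images and images of people or vehicles never reach the second model.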

Species classified and model performance

Currently, the model uses 37 classes. Each image or video that is submitted, taken by day or by night, is predicted to show either:

- nothing: class ‘empty’

- a human presence: classes ‘human’, ‘vehicule’

- an ungulate: classes ‘bison’, ‘chamois’, ‘fallow deer’, ‘ibex’, ‘moose’, ‘mouflon’, ‘red deer’, ‘reindeer’, ‘roe deer’, ‘wild boar’

- a different large mammal: classes ‘bear’, ‘lynx’, ‘wolf’, ‘wolverine’

- a smaller mammal: classes ‘badger’, ‘beaver’, ‘lagomorph’, ‘genet’, ‘hedgehog’, ‘marmot’, ‘mustelid’, ‘nutria’, ‘otter’, ‘racoon’, ‘red fox’, ‘squirrel’

- a domestic animal: classes ‘cat’, ‘cow’, ‘dog’, ‘equid’, ‘goat’, ‘sheep’

- something else, within classes ‘bird’ or ‘micromammal’
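For readers who post-process predictions, the list above can be written as a lookup table. This is a sketch: the class names are copied from the list, but the group names and the `GROUP_OF` helper are our own illustrative choices, not part of the DeepFaune software.

```python
# Class names as listed above; the group labels are illustrative.
CLASS_GROUPS = {
    "nothing": ["empty"],
    "human presence": ["human", "vehicule"],
    "ungulate": [
        "bison", "chamois", "fallow deer", "ibex", "moose",
        "mouflon", "red deer", "reindeer", "roe deer", "wild boar",
    ],
    "other large mammal": ["bear", "lynx", "wolf", "wolverine"],
    "smaller mammal": [
        "badger", "beaver", "lagomorph", "genet", "hedgehog", "marmot",
        "mustelid", "nutria", "otter", "racoon", "red fox", "squirrel",
    ],
    "domestic animal": ["cat", "cow", "dog", "equid", "goat", "sheep"],
    "other": ["bird", "micromammal"],
}

# Reverse lookup: class name -> group.
GROUP_OF = {
    label: group
    for group, labels in CLASS_GROUPS.items()
    for label in labels
}

# The seven groups together account for the 37 classes.
assert len(GROUP_OF) == 37
```

With this table, `GROUP_OF["lynx"]` gives `"other large mammal"`, which is convenient for summarising results at a coarser level than the individual classes.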

Images for which the confidence score is below a user-defined threshold are considered ‘undefined’, and should be inspected visually to be classified.

However, for many species, predictions are correct more than 95% of the time (results per species here).
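The confidence threshold described above amounts to a simple rule, sketched here under stated assumptions: the function name, the tuple layout and the threshold value of 0.8 used in the example are illustrative, not taken from the DeepFaune software.

```python
from typing import List, Tuple

Prediction = Tuple[str, str, float]  # (file name, predicted class, confidence score)


def apply_threshold(predictions: List[Prediction], threshold: float) -> List[Prediction]:
    """Relabel predictions whose score is below the threshold as 'undefined'."""
    return [
        (name, label if score >= threshold else "undefined", score)
        for name, label, score in predictions
    ]


raw = [("IMG_0001.JPG", "wolf", 0.97), ("IMG_0002.JPG", "lynx", 0.41)]
checked = apply_threshold(raw, threshold=0.8)
# The second image falls below the threshold and should be inspected visually.
```

Raising the threshold makes the retained predictions more reliable, at the cost of more images to check by eye.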

Try the software on your own images - and let us know how it performs!

The quality of the predictions improves regularly, as we work on the model and add images to the training database. If you have classified camera-trap pictures or videos, you can help us improve the model. Contact us to contribute!

Want to learn more about our work?

You may also be interested in:

Software

We provide free, user-friendly software that runs the DeepFaune model to classify camera-trap pictures or videos. The software returns a spreadsheet with the classifications, and can also copy or move the pictures or videos into distinct folders according to their classification.

This software has been designed to run on a standard computer and does not require sending pictures or videos to a remote server.
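The software performs the folder sorting itself. For readers who want to redo it from the spreadsheet, here is a minimal sketch, assuming the results were exported as a CSV file. The column names `filename` and `prediction` are hypothetical and should be adapted to the actual file.

```python
import csv
import shutil
from pathlib import Path
from typing import Dict, Union

PathLike = Union[str, Path]


def sort_by_prediction(
    csv_path: PathLike,
    source_dir: PathLike,
    target_dir: PathLike,
    move: bool = False,
) -> Dict[str, int]:
    """Copy (or move) each file into a sub-folder named after its predicted class.

    Returns the number of files placed in each class folder.
    """
    source_dir, target_dir = Path(source_dir), Path(target_dir)
    transfer = shutil.move if move else shutil.copy2
    counts: Dict[str, int] = {}
    with open(csv_path, newline="", encoding="utf-8") as handle:
        for row in csv.DictReader(handle):
            label = row["prediction"]            # assumed column name
            source = source_dir / row["filename"]  # assumed column name
            destination = target_dir / label
            destination.mkdir(parents=True, exist_ok=True)
            transfer(str(source), str(destination / source.name))
            counts[label] = counts.get(label, 0) + 1
    return counts
```

Copying is the default here so that the original pictures stay in place; pass `move=True` to relocate them instead.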

– Download and use –

- Windows:

- Download the compressed folder here, and uncompress it where you want. This folder can be deleted once the installation has been completed (step 2).

- Install by double-clicking on the deepfaune_installer.exe file.

- Launch the DeepFaune software like any other Windows software.

- Linux/Mac:

- Download the compressed folder here.

- Uncompress the folder in an easily accessible location, as you will need to open it each time you launch the software.

- Follow the instructions provided in the README.md file.

The source code is here: https://plmlab.math.cnrs.fr/deepfaune/software/

For help or suggestions, contact us.

Partners

The DeepFaune initiative brings together more than 60 partners, all involved in research, conservation or management of biodiversity: regional or national parks, hunting federations, naturalist associations, research groups and even individuals.

These partners are actively taking part in the project, by contributing camera-trap images or videos to our growing database, and participating in defining the project’s aims and user needs.

Want to join the DeepFaune project? Contact us!

Our team

The DeepFaune team is also made up of Gaspard Dussert (Laboratoire de Biométrie et Biologie Evolutive (LBBE), Lyon), Claire Rossignol (CEFE) and Bruno Spataro (LBBE).

Simon Chamaillé-Jammes is a research director at the CNRS and specializes in population dynamics and behavioral ecology.

Vincent Miele is a research engineer at the CNRS and specializes in developing deep-learning-based computer vision techniques in ecology.

Gaspard Dussert is a PhD student in machine learning who further develops the methodological approaches underlying the DeepFaune models.

Claire Rossignol is a machine learning engineer who assists with data management pipelines. She was hired thanks to the BIODIVERSA BigPicture project.

Bruno Spataro is a research engineer at the CNRS and manages data storage and computation services for the PRABI-LBBE data center.